HireWise

Redefining “Fit” with Equity in Mind

Platform: Web

Tools: Figma, FigJam, Google Docs, Zoom

Role: UX Designer (Research, Prototyping, Testing)

Duration: 4 weeks - Team Project, August 2025

Challenge

Hiring often relies on vague ideas of “culture fit” that unintentionally create bias and reduce diversity. Existing applicant tracking systems (ATS) and AI tools were efficient but lacked transparency, inclusivity, and accessibility. We wanted to explore how design could help recruiters hire fairly and confidently, while still meeting business pressures to hire quickly.

Our team asked: How might we design an AI-driven tool that gives HR teams visibility and control, while building equity and fairness into their hiring workflow?

Process

Research and insights:

We interviewed job seekers, whose frustrations centered on bias and a lack of feedback.

A stakeholder pivot:

Collaborated with peers and our instructor (acting as PM) to pivot the project focus, aligning usability tests with business goals.

Shifted our focus from job seekers to HR teams once we identified them as the main agents of change.

A competitive scan of current tools like HireVue and Harver showed an emphasis on speed, not fairness.

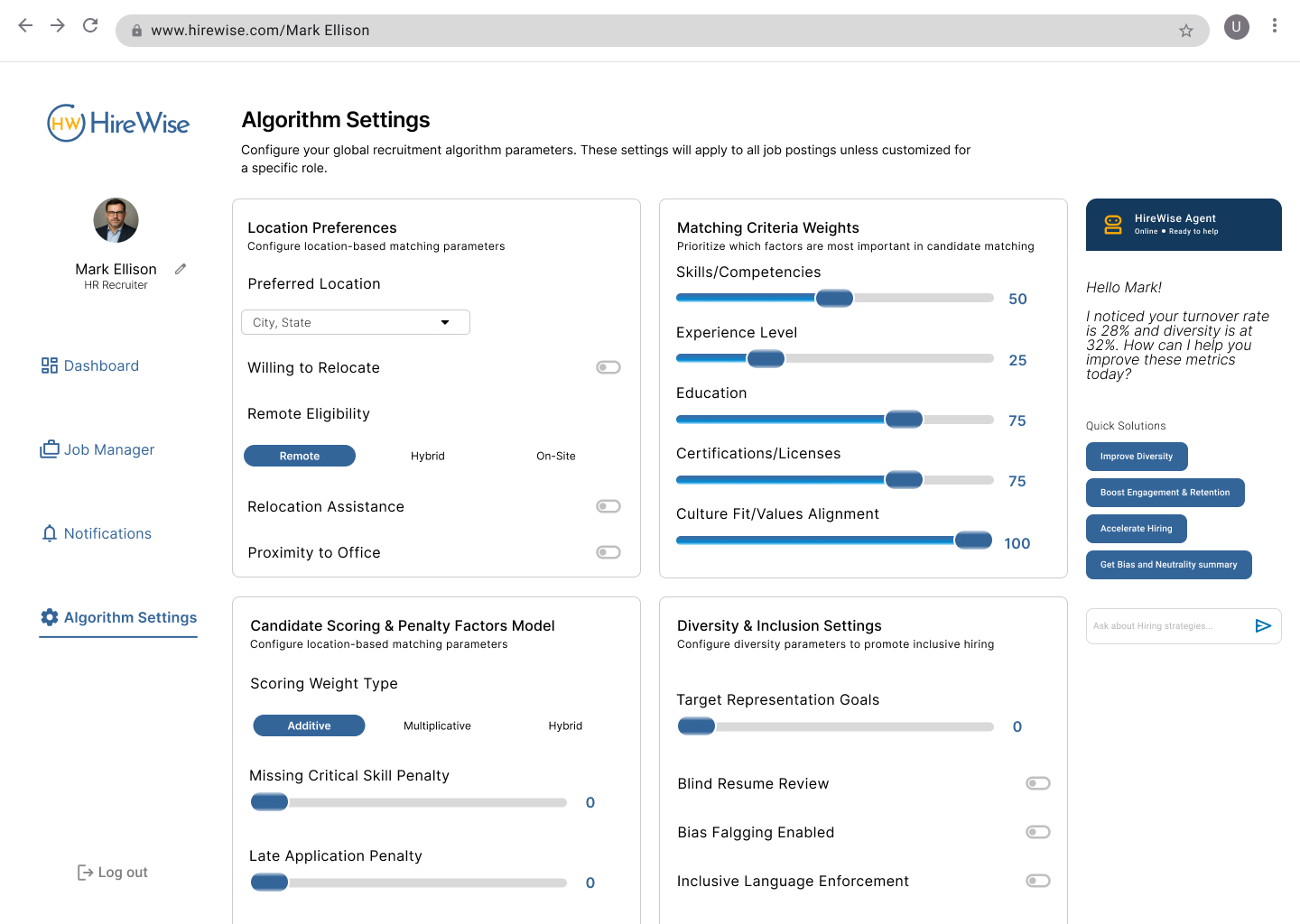

I developed a persona:

Mark Ellison, Director of Talent Acquisition:

Goals: attract diverse talent, reduce turnover, hire quickly

Struggles: resume overload, limited AI literacy, high pressure to deliver

Need: guidance on inclusive hiring without jargon or extra steps

Design and Testing

A teammate and I designed multiple concepts:

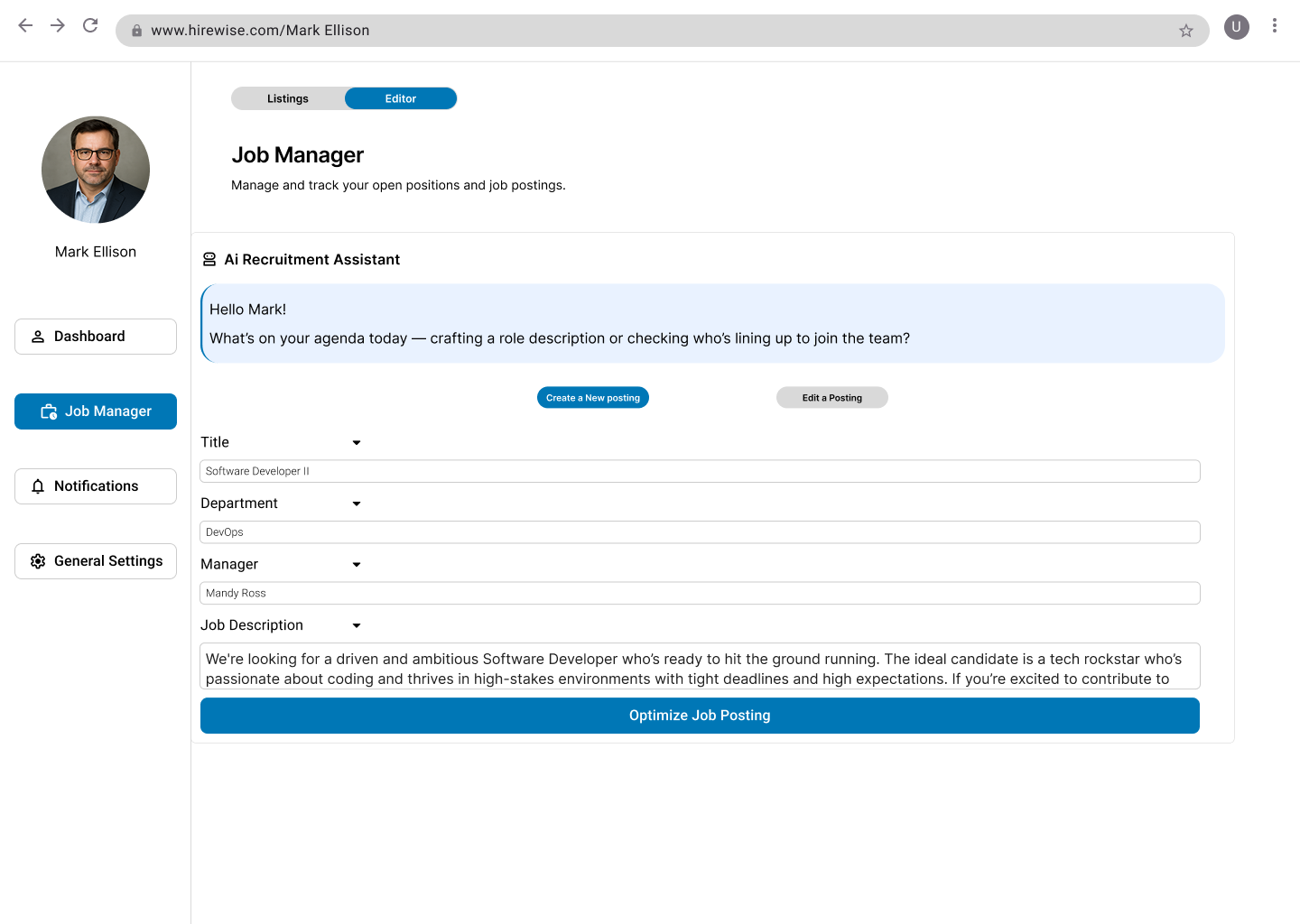

Wireframes: job posting flow and recruiter dashboard concepts

AI assistant prototype: provided inclusive-language prompts, equity scoring, and bias-aware job description suggestions to guide recruiters.

Usability testing: 5 HR recruiting professionals

Methods included think-aloud protocols, task scenarios, and post-test interviews

Iterations based on feedback

Simplified crowded AI suggestions.

Grouped quick actions for clarity.

Added tooltips and clearer hierarchy.

Improved accessibility through spacing and control states.

Outcomes

User feedback

Recruiters found the interface easy to use and appreciated the ethical focus.

The AI helper was described as “helpful and neat” for crafting inclusive language.

Users liked fairness reminders but asked for more control and transparency.

Prototype showed how equity can be part of the workflow, not an afterthought.

What I learned

Clarity beats complexity: Simple, well-organized AI suggestions built trust.

Transparency is critical: Recruiters wanted to understand how equity scores were generated.

Iteration matters: Small changes in wording and layout improved confidence in the design and made it feel more professional.

Next Steps

If developed further:

Strengthen accessibility by aligning with WCAG standards.

Increase algorithm transparency through clearer settings.

Measure impact with metrics such as recruiter confidence, time saved per posting, and diversity of hires.